This week, I asked some people how they choose where to seek care, and most of the time the answer was some combination of the following:

Recommendations from an immediate social network of friends, family, and co-workers;

Convenience of access (e.g. how close is it, how soon can I get care, and do they take my insurance?);

Reputation (e.g. hospital marketing, being mentioned in the news, or affiliation with a well-known or prestigious university).

Did you notice that nothing on that list touches on what we typically consider healthcare quality, such as how safe, effective, or patient-centered the care is?

Silently crying because I’m in that bucket.

In 2010, the Agency for Healthcare Research & Quality (AHRQ) published a series of research reports on public reporting of health outcomes. The specific challenges it highlighted included the following:

Consumers don’t know there’s a quality gap in outcomes between hospitals and clinicians;

Patient-Clinician Disconnect: what clinical experts consider quality (complications, readmissions, mortality) isn’t the same as what patients consider quality (affordability, a doctor’s credentials, and access);

Clinical quality concepts are pretty hard to understand and aren’t meaningful to patients (for example, a patient might read a longer length of stay as a sign that a hospital is good, because they can stay as long as they want);

Interpreting clinical quality data is hard cognitive work, and consumers don’t want to do that weighing in their heads.

Why Rankings?

That gets at the big reason hospital rankings matter so much. A ranking is designed to give consumers a single, interpretable touchpoint: a rank-ordered list they can use to choose where to get care.

The most prestigious US hospital ranking is probably the US News & World Report “Best Hospitals” list, which ranks hospitals by specialty, includes an honor roll of the hospitals with the largest number of highly ranked specialties, and provides local ratings for common procedures and conditions. Recently, Washington Monthly and the Lown Institute also published a new ranking.

In 2011, Malcolm Gladwell published a New Yorker article reviewing the concept of consumer rankings, particularly the US News & World Report “Best Colleges” guide.

Sorry for triggering some of you out there

Gladwell did a good job of critiquing the concept of rankings, pointing to:

the fact that the factors being measured were often only proxies for ‘quality’ rather than measures of it;

the weighting of those factors, which rested on explicit value judgements, including an overemphasis on selectivity;

reputational ratings, which “are simply inferences from broad, readily observable features of an institution’s identity, such as its history, its prominence in the media, or the elegance of its architecture. They are prejudices;”

the exclusion of ‘price’ or value.

Overall, Gladwell’s argument is best encapsulated in this line:

“Rankings are not benign. They enshrine very particular ideologies...who comes out on top, in any ranking system, is really about who is doing the ranking.”
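To make Gladwell’s point about weighting concrete, here is a minimal sketch of a weighted composite score. It assumes a ranking is a weighted average of normalized measure scores, which is a simplification and not the method of any particular ranking. The hospitals, scores, and the two weighting schemes (REPUTATION_HEAVY and SAFETY_AND_VALUE) are all made up for illustration.

```python
# Illustrative only: made-up hospitals and normalized scores (0-1, higher is better).
HOSPITALS = {
    "Hospital A": {"safety": 0.90, "reputation": 0.40, "value": 0.70},
    "Hospital B": {"safety": 0.60, "reputation": 0.95, "value": 0.30},
    "Hospital C": {"safety": 0.75, "reputation": 0.60, "value": 0.90},
}

# Two hypothetical weighting schemes, i.e. two different sets of value judgements.
REPUTATION_HEAVY = {"safety": 0.2, "reputation": 0.7, "value": 0.1}
SAFETY_AND_VALUE = {"safety": 0.5, "reputation": 0.1, "value": 0.4}


def composite_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of a hospital's measure scores."""
    total_weight = sum(weights.values())
    return sum(scores[measure] * w for measure, w in weights.items()) / total_weight


def rank(hospitals: dict[str, dict[str, float]], weights: dict[str, float]) -> list[str]:
    """Hospital names ordered from highest to lowest composite score."""
    return sorted(
        hospitals,
        key=lambda name: composite_score(hospitals[name], weights),
        reverse=True,
    )


print(rank(HOSPITALS, REPUTATION_HEAVY))  # ['Hospital B', 'Hospital C', 'Hospital A']
print(rank(HOSPITALS, SAFETY_AND_VALUE))  # ['Hospital C', 'Hospital A', 'Hospital B']
```

The data are identical in both calls. Only the weights change, and the hospital that comes out on top changes with them.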

Overview of Rankings

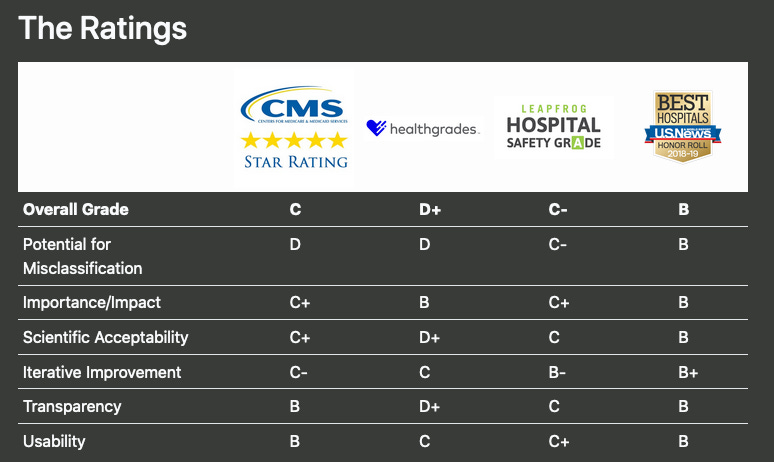

With a new ranking system on the scene, I thought it would be interesting to break down a few popular hospital ranking systems and how they rate and judge hospital quality.

Unsurprisingly, hospitals are generally critical of these rankings, though they mostly point to issues of methodology.

The hospital health-services researcher’s idea of throwing shade.

But first, “gentlemen, a short view back to the past.”

Nikhil is the healthcare meme master, so I’ll settle for niche Formula 1 memes…

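In 2001, the Institute of Medicine (IOM) released a report called Crossing the Quality Chasm that broke the idea of quality down into six big domains: care should be safe, effective, patient-centered, timely, efficient, and equitable.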

For clarity, I thought it would be useful to use these six domains to sort out the ideologies behind the various rating systems.

Safety

One interesting takeaway is that most of these rankings focus on safety as a core concept. Ratings like Leapfrog specifically took this route, seeking to rate hospitals on how well they keep patients ‘free of harm.’

Effectiveness

Others emphasize ‘Effectiveness’ but mostly assume that readmissions are a good proxy for it, since a procedure might not have been entirely successful if the patient needed to go back to the hospital for an unplanned visit. I think there’s a greater opportunity in Patient-Reported Outcome Measures (PROMs), where hospitals track function and symptoms over time, specifically whether patients exceed a threshold for minimal improvement (often called the minimal clinically important difference, or MCID). Mandatory tracking is already used in some countries, including the UK and Norway.
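As a rough sketch of what a PROM-based effectiveness measure could look like, the snippet below computes the share of patients whose improvement reaches an MCID. It assumes matched baseline and follow-up scores on a PROM where higher is better, and it treats “exceeds the threshold” as meeting or exceeding it. The 8-point threshold, the PromPair type, and the patient scores are hypothetical; real MCIDs are specific to each instrument.

```python
from dataclasses import dataclass

# Hypothetical MCID in score points; real thresholds depend on the instrument.
MCID_POINTS = 8.0


@dataclass
class PromPair:
    """One patient's PROM scores (higher = better function) before and after care."""
    baseline: float
    follow_up: float


def share_reaching_mcid(pairs: list[PromPair], mcid: float = MCID_POINTS) -> float:
    """Fraction of patients whose improvement meets or exceeds the MCID."""
    if not pairs:
        raise ValueError("need at least one patient with matched scores")
    improved = sum(1 for p in pairs if p.follow_up - p.baseline >= mcid)
    return improved / len(pairs)


# Made-up patients: improvements of 15, 3, 15, and -2 points.
patients = [PromPair(20, 35), PromPair(30, 33), PromPair(25, 40), PromPair(40, 38)]
print(share_reaching_mcid(patients))  # 0.5
```

A hospital-level measure would then compare this share across hospitals, ideally with risk adjustment, which this sketch leaves out.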

Accessibility

Accessibility seems like an afterthought for most of these rankings and ratings, measured mostly through ED throughput. This is a tougher concept, since some hospitals don’t employ their physicians, and the true signs of limited access are whether the doctors take your insurance (hello, surprise billing!) and whether the next available appointment is weeks or months away.

Person-Centeredness

Person-centered care seems mostly focused on measures of patient satisfaction using the standard HCAHPS survey. James Gardner shared an interesting resource on using social media sentiment, but that’s essentially the same kind of data point with less time lag. I think the real sign of person-centered care is whether care is aligned with a patient’s preferences and values, assessed with standard measures of Shared Decision-Making and goal concordance.

Efficiency

Efficiency is a great concept, I think, and only CMS and the Washington Monthly/Lown Institute even track overuse. Embold Health is a company I’ll keep my eye on in terms of how they roll out their measures to a wider audience or seek NQF endorsement. I’m not exactly sure why length of stay or profit margin is something for IBM Watson (née Truven) to track. CMS also has some interesting measures here that compare observed with expected spending over an episode of care, but it’s a shame they aren’t used in its rating systems.
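For readers who haven’t seen an observed-versus-expected spending measure before, here is a minimal sketch of the core arithmetic: total observed episode spending divided by total expected spending. This is a generic O/E ratio under stated assumptions, not CMS’s actual specification; the expected values would come from a risk-adjustment model that is not shown, and every dollar figure here is hypothetical.

```python
def observed_to_expected(observed: list[float], expected: list[float]) -> float:
    """Total observed episode spending divided by total expected spending.

    Above 1.0: episodes cost more than the model predicted.
    Below 1.0: episodes cost less than predicted.
    """
    if not observed or len(observed) != len(expected):
        raise ValueError("need matching, non-empty observed and expected lists")
    total_expected = sum(expected)
    if total_expected <= 0:
        raise ValueError("expected spending must be positive")
    return sum(observed) / total_expected


# Hypothetical episodes: dollars actually spent vs. dollars a (hypothetical)
# risk-adjustment model predicted for the same patients.
observed = [12_000.0, 9_500.0, 15_000.0]
expected = [11_000.0, 10_000.0, 14_000.0]
print(round(observed_to_expected(observed, expected), 3))  # 1.043
```

Summing before dividing weights each episode by its expected cost, so a few expensive episodes influence the ratio more than many cheap ones. That is one design choice among several.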

Equity

Finally, on Equity, I really do need to applaud the Washington Monthly/Lown Institute for paying attention to inclusivity, pay equity, and community benefit. I’m looking forward to more systems trying to tie this in.

What would I do?

So what would I do if I were designing the perfect ranking system? A few points:

1. Mandating or incentivizing PROM collection to track effectiveness

2. Measuring the time to a next appointment and incidence of surprise billing to track accessibility

3. Adding measurements of shared decision making perceptions and goal concordance for elective surgeries to track patient-centered care

4. Tracking disparities in health outcomes between socio-economic classes, inclusivity of the patient population, and community benefit to track equity.

Thanks for reading! What do you think would make the best rating system? How do you think we can get people to better understand the consequences of where they choose to receive care? How should this framework be applied to physician measures? Let me know at kevinwang@hey.com (yeah, I jumped on the bandwagon).